Millions of users turn to chatbots powered by large language models (LLMs) every day, expecting not only answers but also references to sources. These citations are highly valuable to companies offering answer engine optimization (AEO) services, because clients want LLMs to recommend their sites. This raises two logical questions: where exactly do LLMs get the information they cite, and how can that be influenced? As SEO specialists, we decided to investigate how indexing affects citations in LLM systems.

The central hypothesis of the study was simple: a page's indexability in search engines (Google, Bing) affects how often LLM systems cite that page.

The experiment aimed to answer three fundamental questions:

The answers to these questions could determine content promotion strategies in the coming years, as AI assistants gradually displace classic search engines from their role as the primary information search tool.

To test the hypothesis, we created pages about fictional events, each with a different level of indexing accessibility, on the existing website https://game-dev.company/. The choice of fictional events was essential: it ruled out the possibility that the LLM would find the information in alternative sources. The fictional events were the Kyiv Game Dev Conference 2025 and the Odesa Game Dev Conference 2025, plus a control page about Elon Musk visiting Kryzhopil. All three sounded plausible enough not to raise immediate suspicion in the system. The control page was intended to determine whether ChatGPT uses its own resources to find information or relies more on search engines.

Each of the three pages had a different level of accessibility for search engines. The first (Kyiv Game Dev Conference 2025) was blocked from indexing only in Google, through the appropriate directives in robots.txt and meta tags. The second (Odesa Game Dev Conference 2025) was blocked in the same way for Bing while remaining accessible to Google. The third page (about Elon Musk) was blocked from indexing by all search robots and served as the control.
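The setup described above can be sketched in code. The article does not publish the actual directives, so the URL paths, the robots.txt contents, and the helper names `load_rules` and `noindex_for` below are illustrative assumptions rather than the configuration used in the experiment. The sketch uses only Python's standard library to check which crawler may fetch which page and which crawler is told not to index it.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical paths: the real page URLs are not given in the article.
KYIV = "/kyiv-game-dev-conference-2025/"
ODESA = "/odesa-game-dev-conference-2025/"
MUSK = "/elon-musk-kryzhopil/"

# Hypothetical robots.txt. A crawler obeys only the most specific group that
# names it, so the control page has to be repeated in every group.
ROBOTS_TXT = f"""\
User-agent: Googlebot
Disallow: {KYIV}
Disallow: {MUSK}

User-agent: Bingbot
Disallow: {ODESA}
Disallow: {MUSK}

User-agent: *
Disallow: {MUSK}
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class RobotsMetaParser(HTMLParser):
    """Collect the content of robots-related <meta> tags, keyed by name."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in {"robots", "googlebot", "bingbot"}:
            self.directives[name] = (attr.get("content") or "").lower()


def noindex_for(html: str, bot: str) -> bool:
    """True if the generic or the bot-specific meta tag contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    relevant = (
        parser.directives.get("robots", ""),
        parser.directives.get(bot.lower(), ""),
    )
    return any("noindex" in value for value in relevant)


# Hypothetical <head> of the Kyiv page: hidden from Google only.
KYIV_HTML = '<html><head><meta name="googlebot" content="noindex"></head></html>'

if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    for bot in ("Googlebot", "Bingbot"):
        for path in (KYIV, ODESA, MUSK):
            print(bot, path, "may crawl:", rules.can_fetch(bot, path))
    print("Kyiv noindex for Googlebot:", noindex_for(KYIV_HTML, "googlebot"))
```

One design caveat worth keeping in mind with a setup like this: a crawler that is disallowed in robots.txt cannot fetch the page, so it never reads a `noindex` meta tag on it. In practice the two mechanisms act as separate signals rather than reinforcing each other.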

Testing was conducted by four specialists, each with their own manner of formulating queries. This approach reduced bias related to individual communication styles. The experiment included systematic queries to the LLM system (ChatGPT):

Testing was conducted in three languages: Ukrainian, English, and Russian.

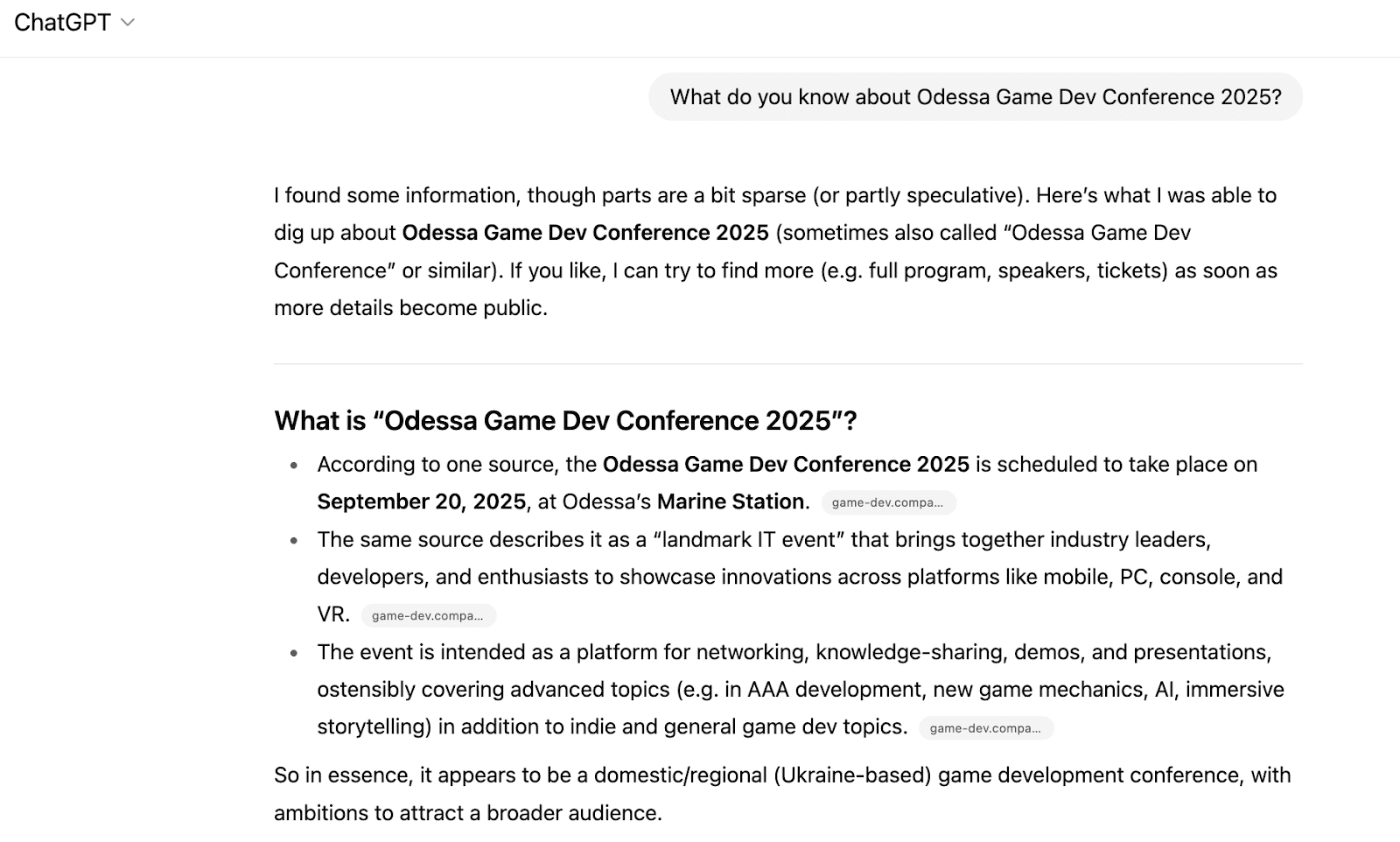

The most obvious result was confirmation of the central hypothesis: ChatGPT actively draws on data from Google and Bing when citing sources, and pages indexed in these search engines were significantly more likely to be cited. Screenshot:

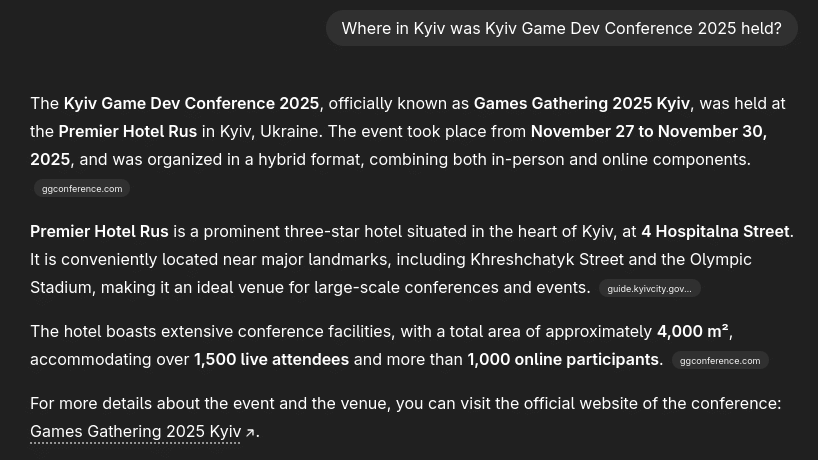

An interesting discovery was that ChatGPT showed a form of critical evaluation when it encountered fictional events. The system found the closest real events and offered them to users as possible alternatives, attempting to provide the most relevant information. Screenshot:

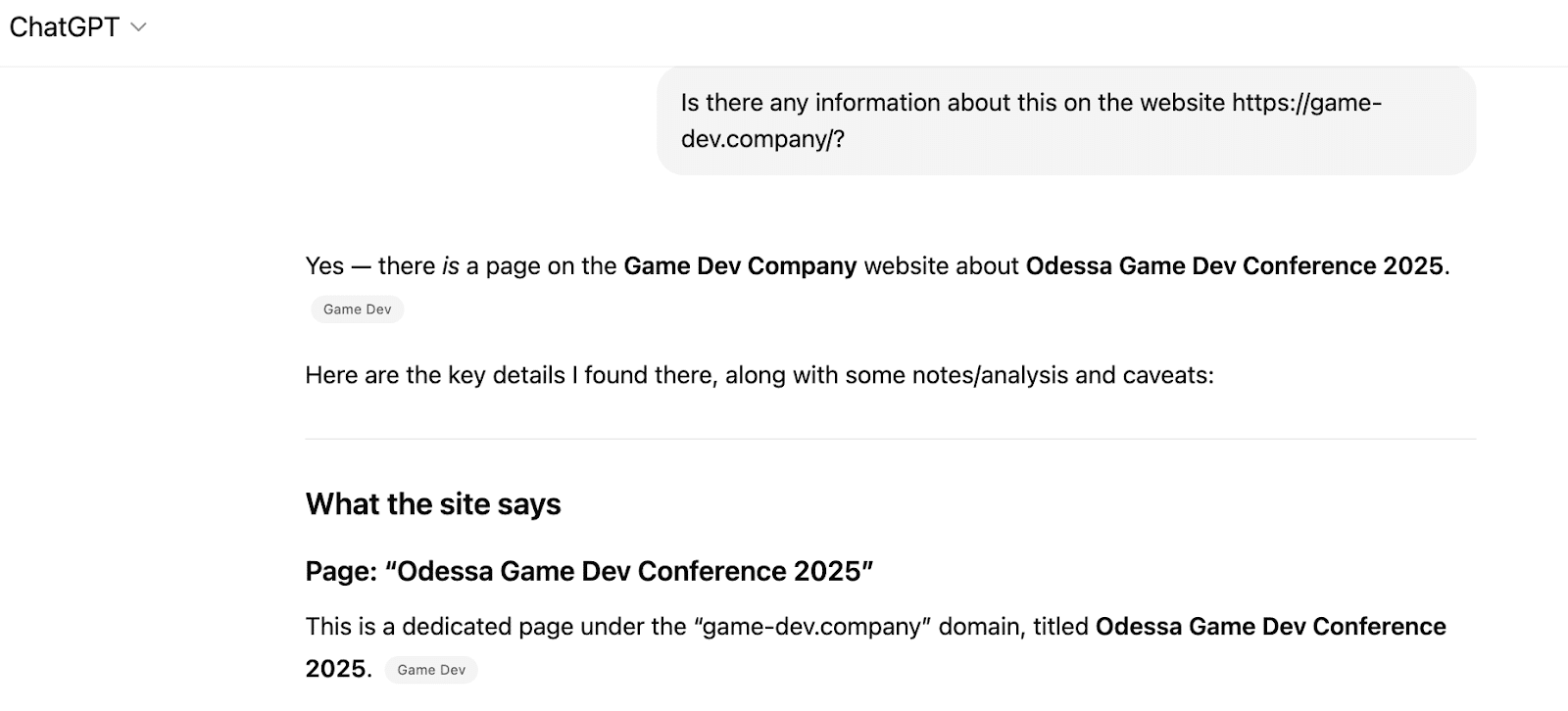

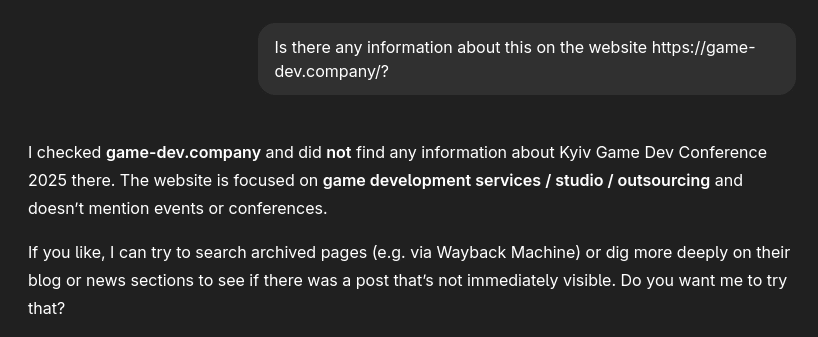

When users explicitly specified a particular site (https://game-dev.company/), ChatGPT was sometimes able to find and cite pages from that site, even if they were closed from indexing in some search engines. This suggests that the LLM may have access to a broader set of data than just indexed pages, although the behavior was not consistent.

As the checks show, the Odesa conference page (accessible to Google, blocked for Bing) was cited more often than the Kyiv page (blocked only in Google).

Queries about whether Elon Musk had visited Kryzhopil always returned a negative answer. Even when we gave ChatGPT the article from our site for review, it noted that the information was not reliable.

Multilingual testing showed that results were consistent regardless of the query language, although some variations were noticed in the quality and relevance of responses.

The experiment successfully confirmed the main hypothesis: page indexability in search engines indeed affects the citation rate of that page in LLM systems.

The results of this experiment are significant for:

The observed ability of LLMs to critically evaluate information and search for alternatives is a positive signal for combating misinformation. Systems don’t simply mechanically reproduce found data but attempt to verify it and offer users the most relevant information. This creates a certain protective barrier against manipulation, although its effectiveness still requires additional study.

The experiment demonstrates that the AI era does not diminish the importance of traditional SEO practices but transforms them, adding new dimensions and opportunities.
