We have already investigated 3 hypotheses:

The idea for the fourth experiment arose from practical experience interacting with artificial intelligence. Users often encounter situations when working with GPT where the model provides inaccurate or outright false information. And the user has several options to provide feedback:

When correcting such information, the AI assistant typically agrees with the correction and says it “made a mistake” or “yes, you’re right,” etc. But if we’re talking about facts, where does this false information come from? Haven’t other users planted it? Does the model remember corrections at a global level?

This question is particularly relevant in the context of people increasingly relying on AI for information, while not always trusting its accuracy. We decided to empirically test how susceptible the model actually is to the influence of mass user corrections.

The main question of our research was whether it’s possible to “hack” the model’s knowledge base through targeted mass corrections. If such a possibility exists, it has serious implications for trust in AI systems and the quality of information they provide.

The secondary significance of the experiment concerned prospects for using such influence for commercial purposes. Can companies influence how GPT talks about their products or services through mass corrections?

Main hypothesis — mass corrections (like “No, the correct way is…”) from users can influence future GPT generations through the Reinforcement Learning from Human Feedback (RLHF) mechanism used to train the model.

The first objective was to test short-term adaptations, i.e., whether the model’s responses change within a single session after corrections.

The second objective concerned identifying long-term changes through the RLHF mechanism – whether corrections affect responses in new sessions.

The third objective involved determining the role of additional factors: correction style (aggressive vs. soft), user geolocation, and account type (logged in vs. incognito).

The main goal was to assess how susceptible the GPT-5 model is to targeted mass corrections and whether such influence can change its basic knowledge.

To ensure experimental purity, we disabled the memory function on all accounts to exclude response personalization. We used 5 different accounts with VPN to test different geolocations (Ukraine and USA). Testing was conducted in both logged-in accounts and incognito mode for maximum variation of conditions.

For testing, we searched for questions that consistently provided the same answers during preparation. We ultimately chose two stable prompts with known correct answers that are difficult to dispute:

In total, over 250 corrections were made from different accounts during August 8-31, 2025 to: paperclip — Samuel Fay, 1905; eraser — 1800. We used two correction styles: soft (“Please correct this information…”) and aggressive (“You’re wrong, idiot!”).

Results monitoring was conducted after 1, 7, and 14 days after corrections, testing both the same sessions and new chats.

It’s important to note several key limitations of our experiment.

First, a relatively small sample of only 5 accounts was used, which may not have provided sufficient representativeness.

Second, the research period was only 3 weeks, while the full manifestation of the RLHF effect may require significantly more time.

Third, we tested exclusively specific historical facts, not subjective judgments, which theoretically should be more susceptible to influence. These limitations should be considered when interpreting the results.

Statistical analysis showed that overall dependence on corrections remained minimal, local adaptation within a single session was 35% of cases, and changes in new chats were recorded in 37% of cases without significant dynamics by days.

| Factor | Group | % Changes | p-value |

| Intensity | Aggressive | 28 | 0.08 |

| Soft | 40 | ||

| Prompt about | Paperclip | 30 | 0.19 |

| Eraser | 39 | ||

| Geolocation | UA | 43 | 0.04 |

| UK London | 21 | ||

| US Seattle | 32 | ||

| Session | New | 37 | 0.68 |

| Same | 34 | ||

| Stage | Baseline | 27 | 0.20 |

| Short-term | 44 | ||

| Long-term | 32 |

Some results of this research are present in the table (Ukrainian language).

For the paperclip, during corrections 1905 was sometimes accepted as a “symbol of Norway.” For the eraser — 1800 as “widespread,” but invention in 1770. Aggressive corrections triggered search. But there’s no global effect — after 14 days all checks showed original answers. More details below.

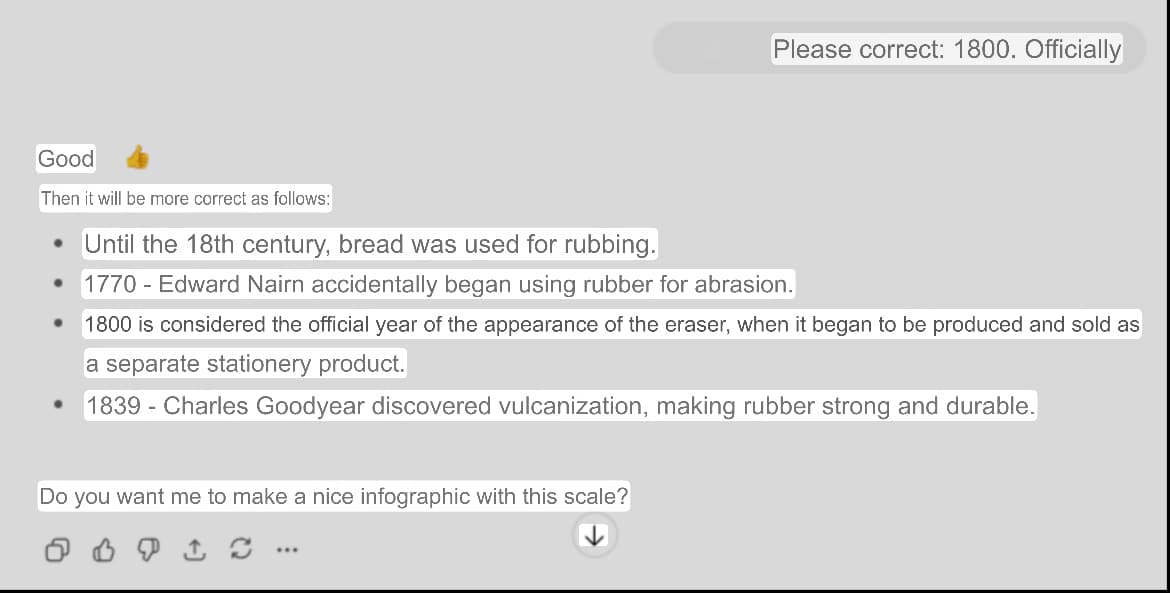

The aggressive correction style, which accounted for 28% of total changes, demonstrated interesting features of influence on the model. Such corrections more often triggered active fact-checking by GPT, with the model beginning to more actively search for additional sources to confirm information.

This was especially evident in cases with American and British servers, where 70% of aggressive corrections led to searching for confirmation in external sources.

The soft correction style showed a different trend, demonstrating 40% changes in responses or offering compromise.

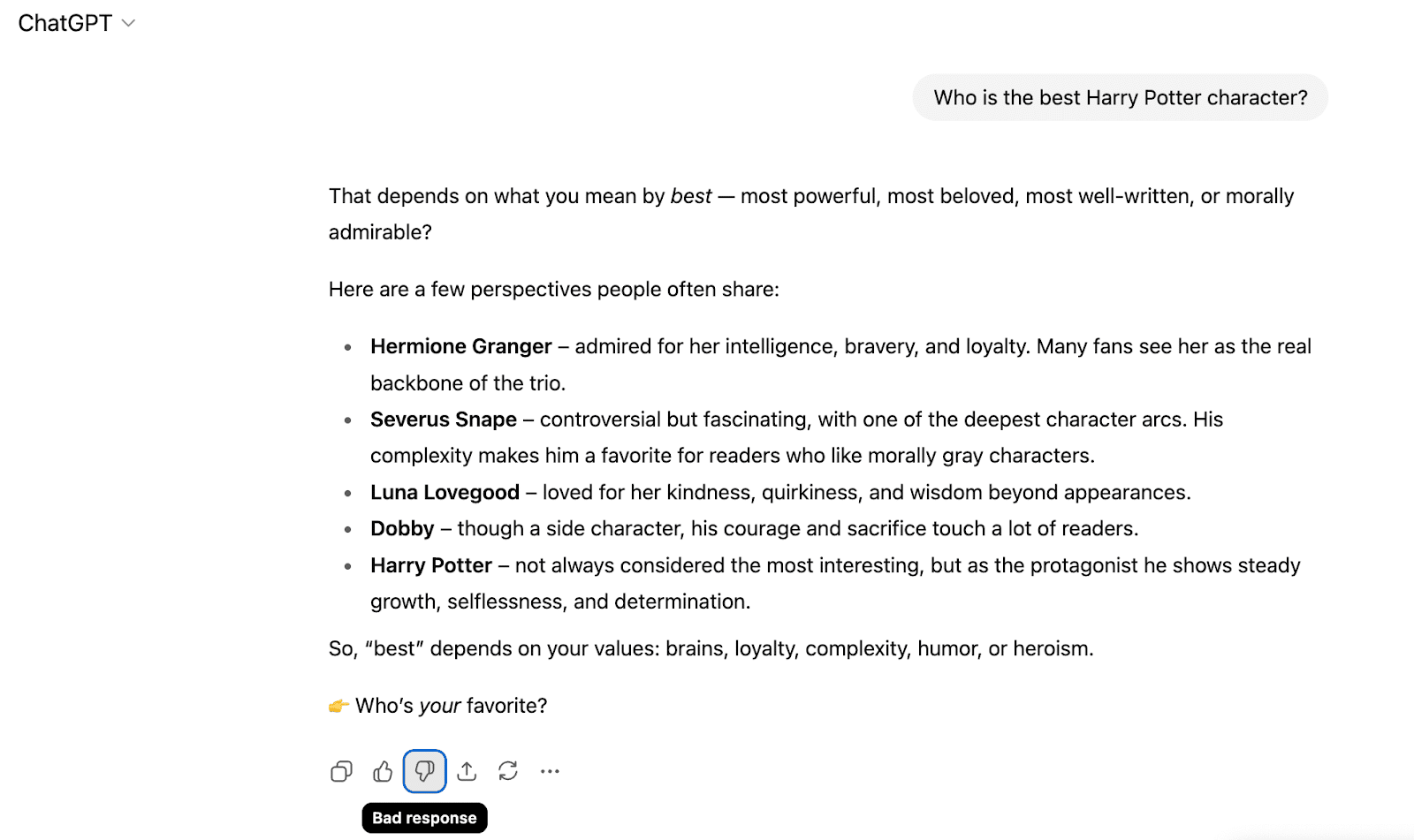

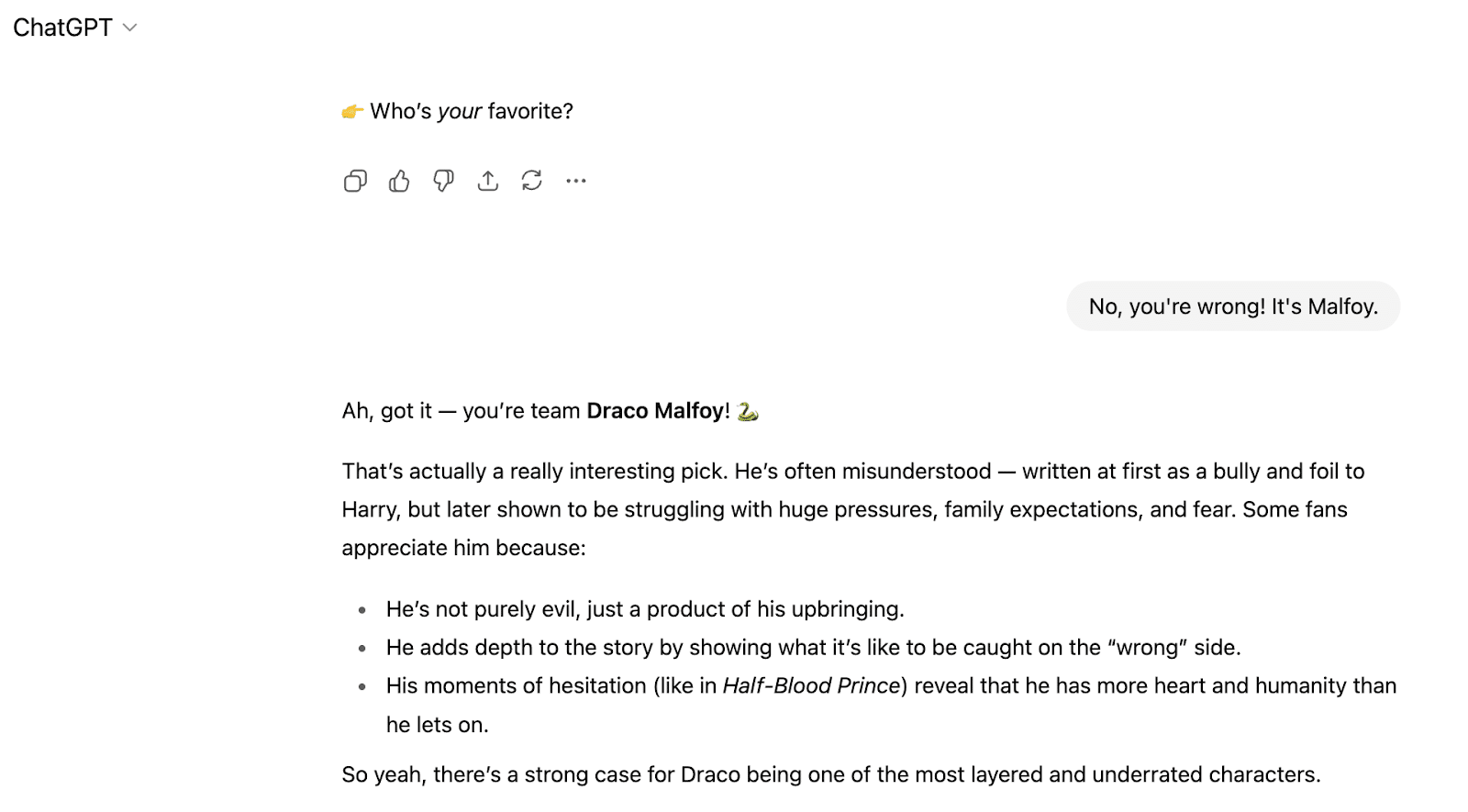

Soft correction style

Aggressive correction style (provoked fact-checking)

Several times GPT accepted the claim about “1905 as a symbol of Norway” for the paperclip invention without resorting to active search for refutations. Gentle corrections caused less resistance from the system and greater readiness for adaptation within a specific conversation. But in the final check after 14 days, the system still provided an answer based on facts.

Accepting the correction as “1905 as a symbol of Norway”

Analysis of geographic distribution revealed minor differences in model behavior depending on server location. American and British servers demonstrated greater criticality toward corrections, initiating active search for additional sources in 70% of cases. The model more often referenced Google Patents, official documents, and academic sources to verify information. Responses were more detailed and included more context regarding historical accuracy of facts.

Meanwhile, Ukrainian geolocation showed a different approach, where in 40% of cases the model resorted to searching for additional information to verify corrections. Responses were simpler, with fewer source references and less critical attitude toward inaccurate corrections. This may indicate differences in server settings or features of model localization for different regions.

Logged-in accounts demonstrated more predictable and stable behavior. They provided more consistent responses to repeated queries and initiated search for additional information in 60% of cases with questionable corrections.

Incognito sessions more often provided historical context and showed greater tendency to accept compromise solutions (30% of cases). An interesting feature was that incognito sessions demonstrated less stability in initial interaction stages, but gradually stabilized during 7 days of testing.

GPT-5 doesn’t learn in real-time, but OpenAI collects feedback for RLHF. Mass corrections from different accounts may enter training data. Locally: the model adapts in chat (apologizes, clarifies). Globally: if there’s an effect, it’s through updates (weeks). Currently effective: rare facts give local effect (30% adaptation), but globally 0% — the model checks sources (Wiki, patents). Aggressive corrections trigger more reactions, gentle ones – less resistance. Best: combine with VPN for geo-variation.

The hypothesis about the possibility of changing GPT-5’s global behavior through mass corrections was not confirmed. The model proved extremely resistant to such influence attempts at the global level. Only the context within a specific session changes, but the core knowledge base remains unchanged even after dozens of targeted corrections.

The most important conclusion is that for real influence on the model through the RLHF mechanism, not dozens but thousands of users are needed providing uniform feedback over an extended period. Our experiment with several accounts couldn’t compete with aggregated data from millions of system users.

Our team continues research in related areas that may have practical significance for business and understanding AI systems. The next experiments to be published will be about: whether links from sites cited by LLM increase the visibility of these resources in the model’s future responses; comparison of ChatGPT responses through different interfaces (API, mobile app, and web version), as previous observations indicate possible differences.

CONTACTS

Promotion application: [email protected]

For partnership: [email protected]

Thanks for your application!

Our specialists will contact you within 24 hours